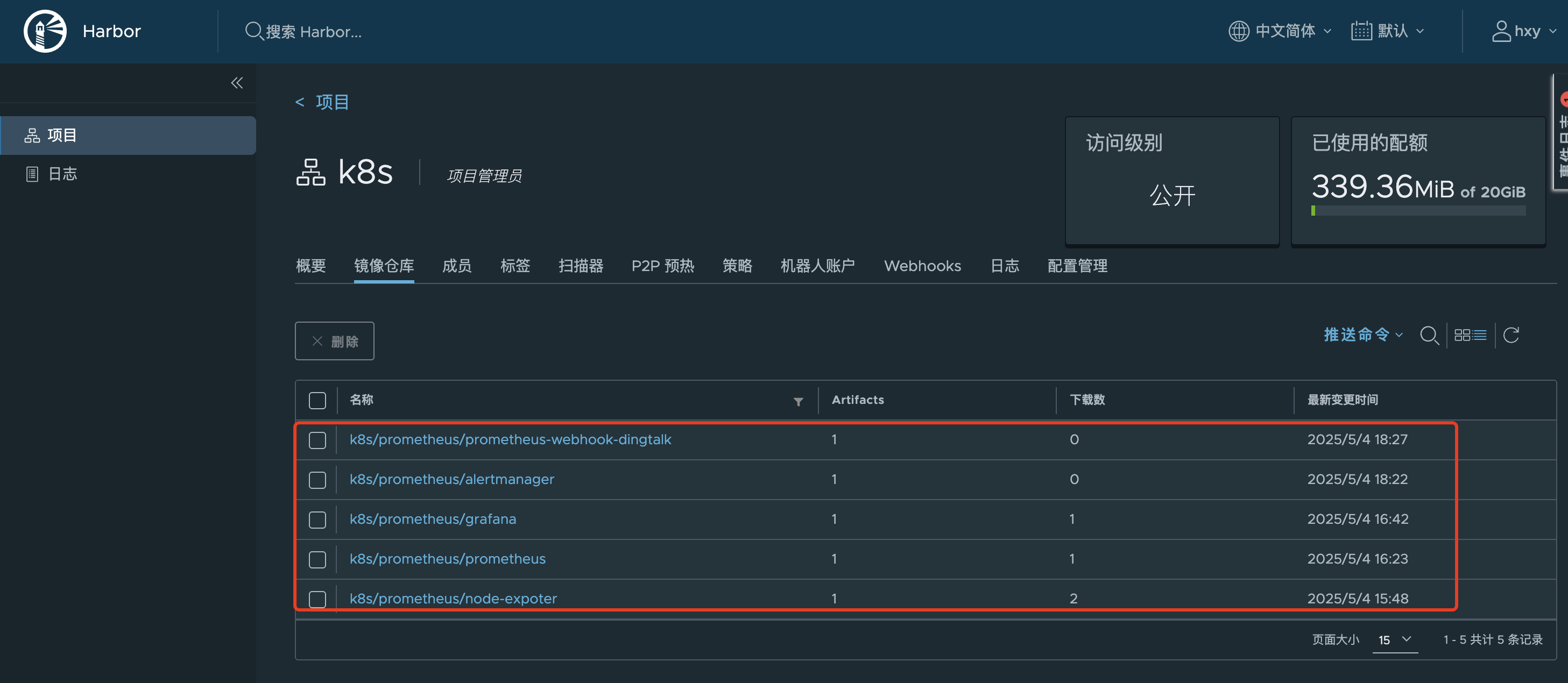

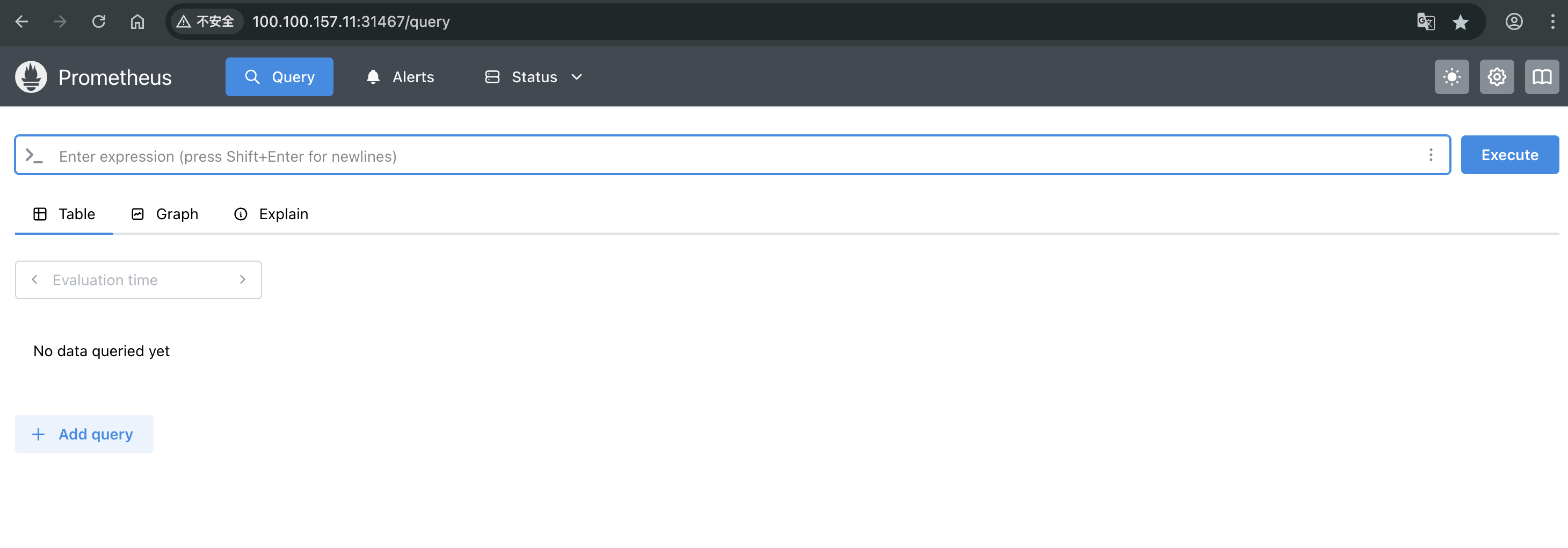

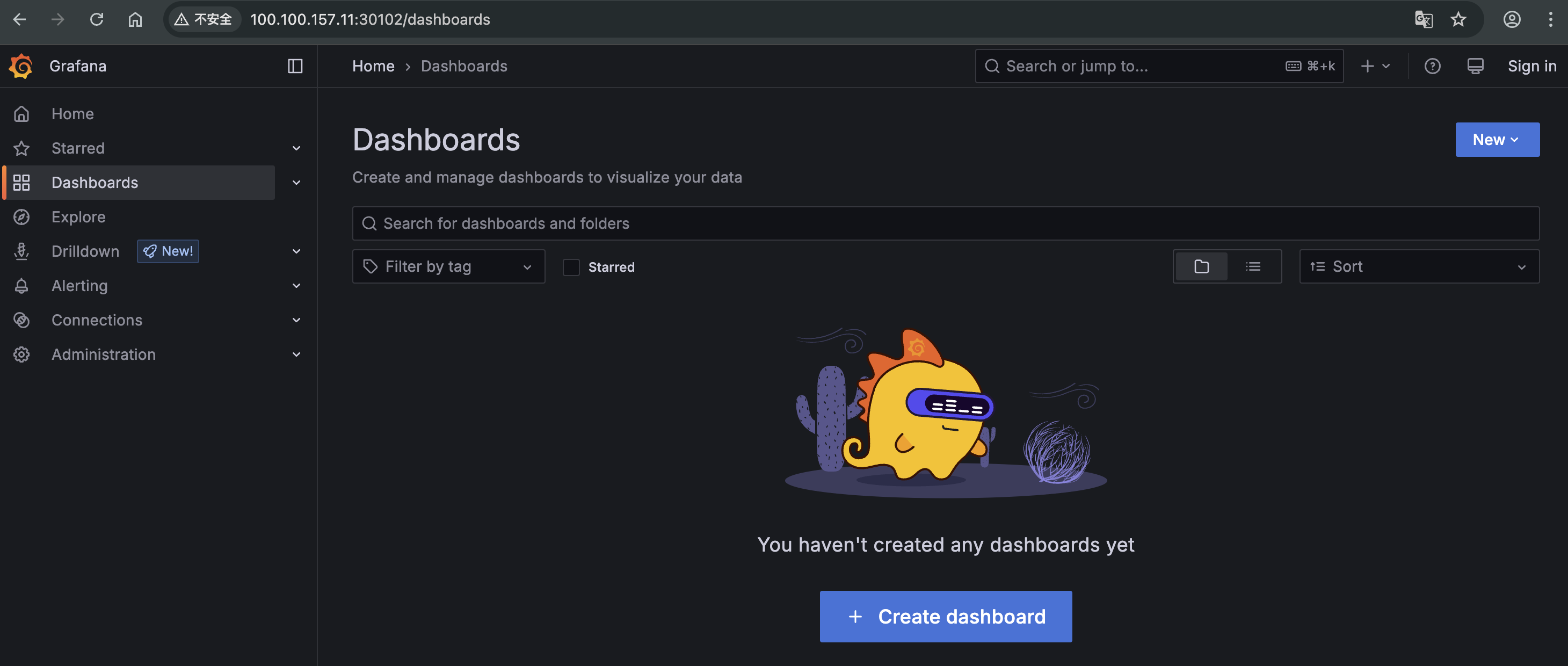

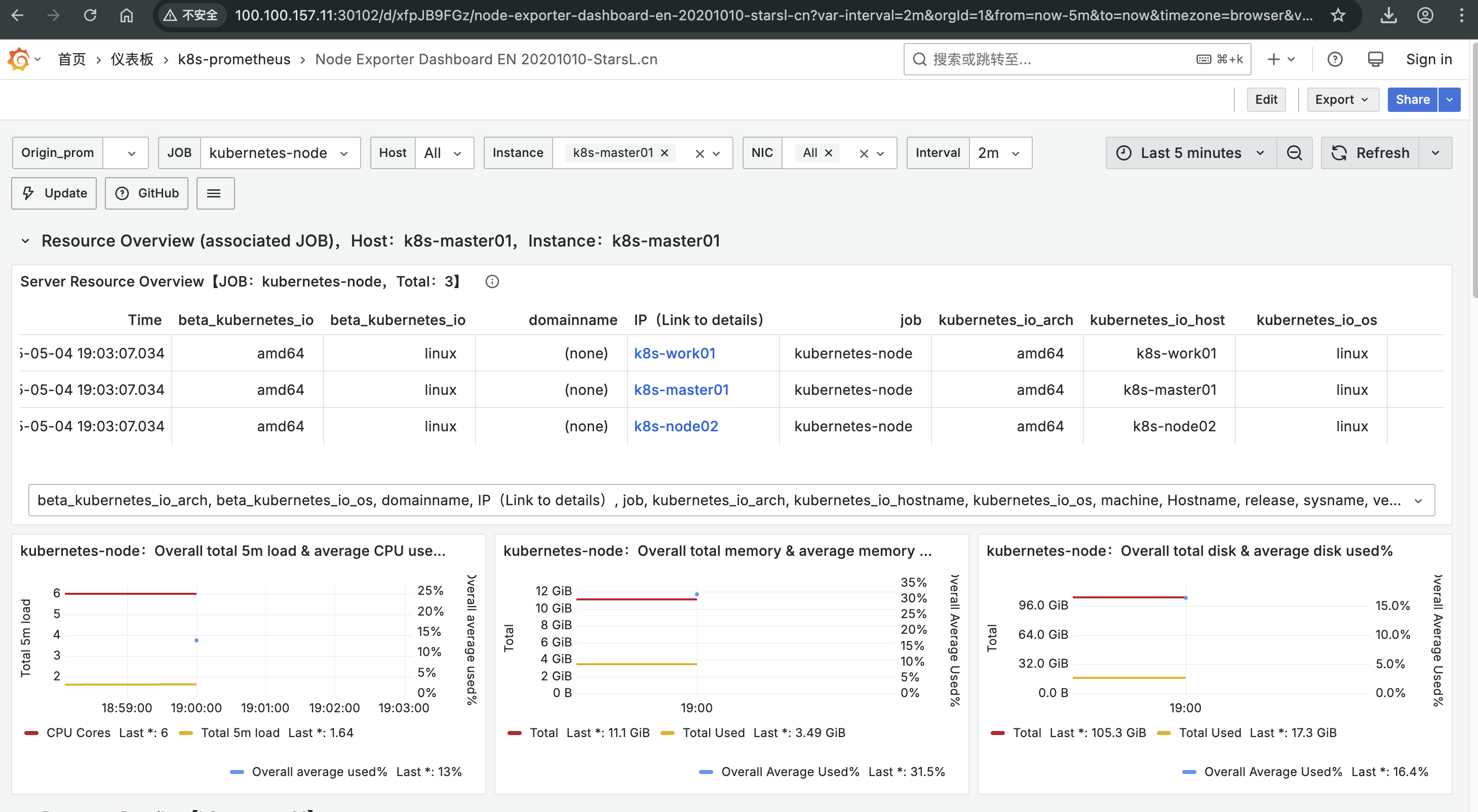

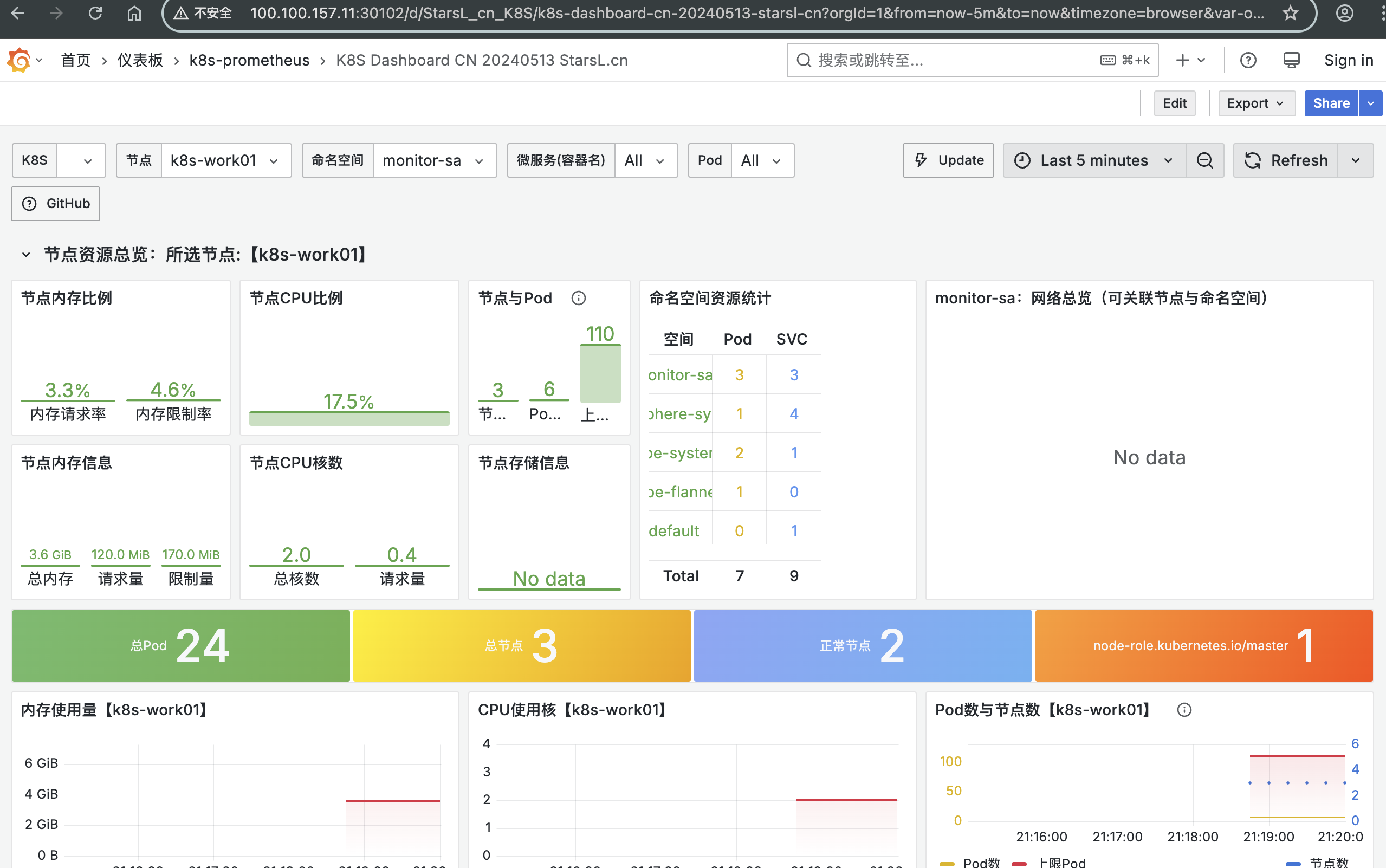

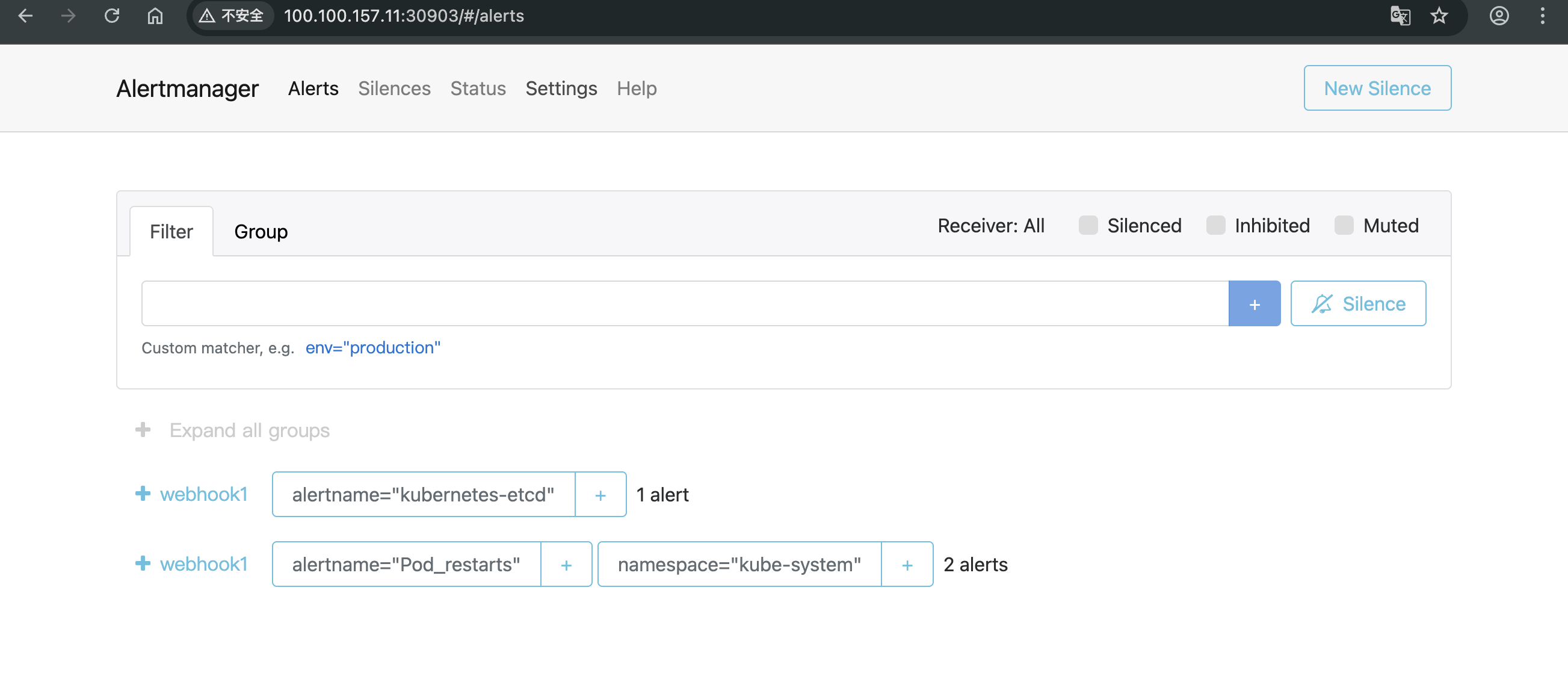

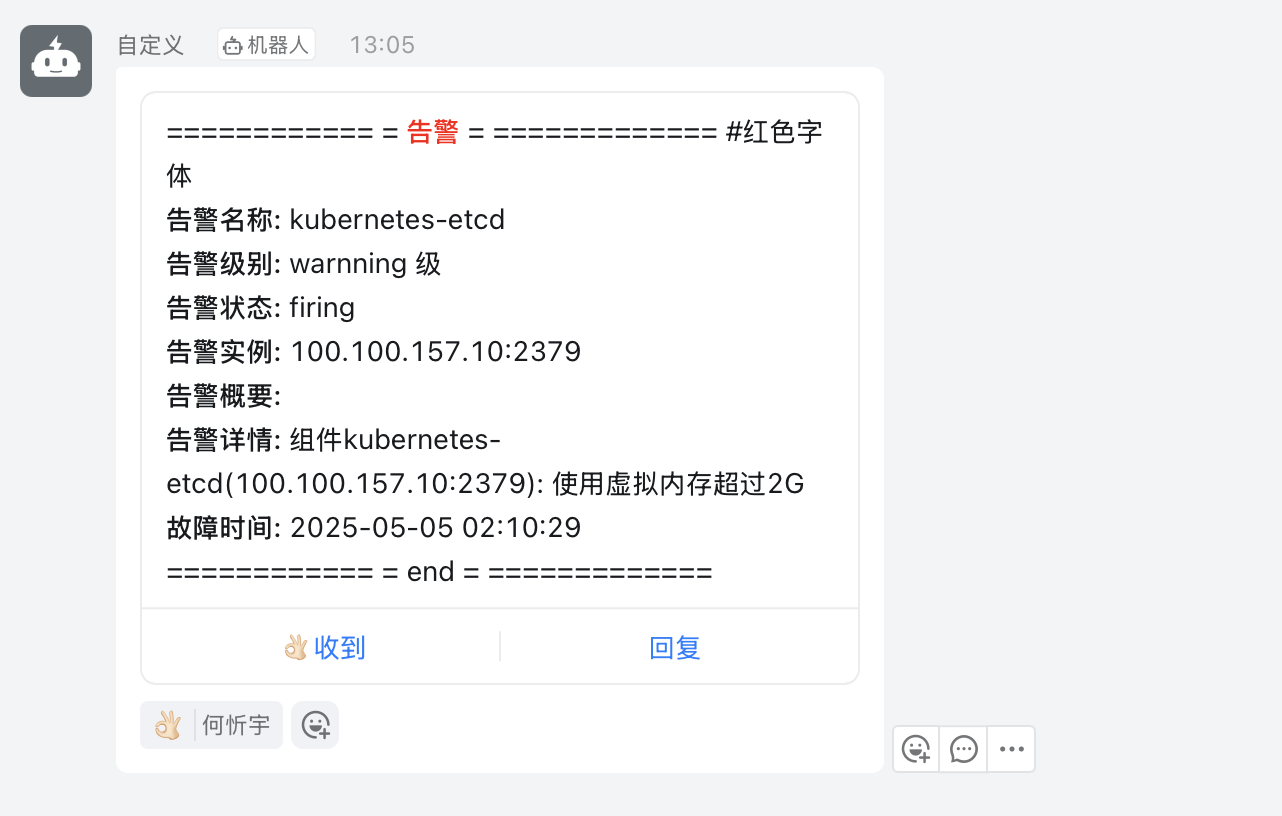

# Building a Monitoring, Visualization, and Alerting Platform on Kubernetes

## 1. Deployment Architecture Planning

> **Components are deployed as `Deployment` resources**
>
> **Deployment workflow**
>
> - Create the ConfigMap
> - Create the Deployment
> - Create the Service

### 1.1 Machine Role Assignment

| Hostname     | IP address     | Role                                                         | Namespace    |
| ------------ | -------------- | ------------------------------------------------------------ | ------------ |
| k8s-master01 | 100.100.157.10 | Operations node                                              | `monitor-sa` |
| k8s-work01   | 100.100.157.11 | node-exporter/prometheus/grafana/Alertmanager                | `monitor-sa` |
| k8s-node02   | 100.100.157.12 | node-exporter/prometheus-webhook-dingtalk/kube-state-metrics | `monitor-sa` |

### 1.2 Official Sites for Reference

| Site                        | URL                                                                              | Purpose             | Notes                            |
| --------------------------- | -------------------------------------------------------------------------------- | ------------------- | -------------------------------- |
| grafana                     | [Dashboard template gallery](https://grafana.com/grafana/dashboards/)            | Dashboard templates | node-exporter template ID: 11074 |
| prometheus-webhook-dingtalk | [GitHub repository](https://github.com/smarterallen/prometheus-webhook-dingtalk) | GitHub code base    | Usage guide                      |

### 1.3 Required Images and Versions

| Image                       | Version (latest for all) |
| --------------------------- | ------------------------ |
| prometheus                  | latest                   |
| node-exporter               | latest                   |
| grafana                     | latest                   |
| Alertmanager                | latest                   |
| prometheus-webhook-dingtalk | latest                   |
| kube-state-metrics          | latest                   |

### 1.4 Environment Preparation Before Installation

- Pull the images and push them to the Harbor image registry

## 2. Install node-exporter

### 2.1 Write and Apply node-export.yaml

```
1. Write node-export.yaml
[root@k8s-master01 prometheus]# cat node-export.yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: monitor-sa
  labels:
    name: node-exporter
spec:
  selector:
    matchLabels:
      name: node-exporter
  template:
    metadata:
      labels:
        name: node-exporter
    spec:
      hostPID: true        # share the host PID namespace
      hostIPC: true        # share the host IPC namespace
      hostNetwork: true    # use the host network: port 9100 is exposed directly on the node, so no Service is needed
      containers:
      - name: node-exporter
        # registry path as pushed by the author (note the "node-expoter" spelling)
        image: 100.100.157.10:5000/k8s/prometheus/node-expoter:latest
        ports:
        - containerPort: 9100
        resources:
          requests:
            cpu: 0.15      # the container requests at least 0.15 CPU cores
        securityContext:
          privileged: true # run in privileged mode
        args:
        - --path.procfs    # point the collectors at the mounted host paths
        - /host/proc
        - --path.sysfs
        - /host/sys
        - --collector.filesystem.ignored-mount-points
        # plain single quotes only: nested double quotes would become part of the regex
        - '^/(sys|proc|dev|host|etc)($|/)'
        volumeMounts:
        - name: dev
          mountPath: /host/dev
        - name: proc
          mountPath: /host/proc
        - name: sys
          mountPath: /host/sys
        - name: rootfs
          mountPath: /rootfs
      tolerations:
      - key: "node-role.kubernetes.io/master"
        operator: "Exists"
        effect: "NoSchedule"
      volumes:
      - name: proc
        hostPath:
          path: /proc
      - name: dev
        hostPath:
          path: /dev
      - name: sys
        hostPath:
          path: /sys
      - name: rootfs
        hostPath:
          path: /

2. Create the namespace
kubectl create ns monitor-sa

3. Apply the manifest
kubectl apply -f node-export.yaml

4. Verify that the pods were created and that each pod IP matches its host IP
[root@k8s-master01 prometheus]# kubectl get pod -n monitor-sa -o wide
NAME                  READY   STATUS    RESTARTS   AGE    IP               NODE           NOMINATED NODE   READINESS GATES
node-exporter-8sg29   1/1     Running   0          165m   100.100.157.12   k8s-node02     <none>           <none>
node-exporter-vwvg6   1/1     Running   0          165m   100.100.157.10   k8s-master01   <none>           <none>
node-exporter-wv9mw   1/1     Running   0          165m   100.100.157.11   k8s-work01     <none>           <none>
```

### 2.2 Test Whether node-exporter Can Collect Data

```
# Fetch the metrics with: curl <host IP>:9100/metrics
# Here I query the CPU metrics of the master node
[root@k8s-master01 prometheus]# curl 100.100.157.10:9100/metrics | grep node_cpu_seconds
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
  0     0    0     0    0     0      0      0 --:--:-- --:--:-- --:--:--     0
# HELP node_cpu_seconds_total Seconds the CPUs spent in each mode.
# TYPE node_cpu_seconds_total counter
node_cpu_seconds_total{cpu="0",mode="idle"} 480057.49
node_cpu_seconds_total{cpu="0",mode="iowait"} 1090.83
```

## 3. Install prometheus-server

### 3.1 Create a ServiceAccount and Grant It RBAC Permissions

```
1. Create a ServiceAccount named monitor
[root@k8s-master01 prometheus]# kubectl create serviceaccount monitor -n monitor-sa
serviceaccount/monitor created

2. Bind the monitor ServiceAccount to a ClusterRole through a ClusterRoleBinding
[root@k8s-master01 prometheus]# kubectl create clusterrolebinding monitor-clusterrolebinding -n monitor-sa --clusterrole=cluster-admin --serviceaccount=monitor-sa:monitor
clusterrolebinding.rbac.authorization.k8s.io/monitor-clusterrolebinding created

3. Create the data directory (on k8s-work01, because the Prometheus pod is pinned there with nodeName and uses a hostPath volume)
mkdir -p /root/hxy/data/prometheus/
chmod 777 /root/hxy/data/prometheus/
```

### 3.2 Install the Prometheus Server

```
1. Create a ConfigMap that holds the Prometheus configuration
[root@k8s-master01 prometheus]# cat prometheus-cfg.yaml
---
kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    app: prometheus
  name: prometheus-config
  namespace: monitor-sa
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s       # how often metrics are scraped from the targets
      scrape_timeout: 10s        # scrape timeout, 10s by default
      evaluation_interval: 1m    # how often alerting rules are evaluated, 1m by default
    scrape_configs:              # data sources, called targets; each group of targets is named by job_name
    - job_name: 'kubernetes-node'
      kubernetes_sd_configs:     # use Kubernetes service discovery
      - role: node               # node role: discovers every node in the cluster through the kubelet's default HTTP port
      relabel_configs:           # relabeling
      - source_labels: [__address__]   # source label to match: the target address
        regex: '(.*):10250'            # match addresses that use port 10250
        replacement: '${1}:9100'       # keep the IP captured from ip:10250
        target_label: __address__      # the new address is <captured IP>:9100
        action: replace
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
    - job_name: 'kubernetes-node-cadvisor'
      # Scrape cAdvisor data: the kubelet's /metrics/cadvisor endpoint reports container resource usage
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap           # keep the labels that match
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
    - job_name: 'kubernetes-apiserver'
      kubernetes_sd_configs:
      - role: endpoints            # use Kubernetes endpoints discovery to scrape the apiserver on port 6443
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      # namespace of the endpoint object, its service name, and its port name
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
        action: keep               # scrape only the instances that match; drop all others
        regex: default;kubernetes;https
    - job_name: 'kubernetes-service-endpoints'
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
        action: keep
        regex: true
        # Keep only endpoints whose Service carries the annotation "prometheus.io/scrape: true";
        # in other words, a Service is scraped only if it declares that annotation
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
        action: replace
        target_label: __scheme__
        regex: (https?)
        # Reset the scheme: the source label __meta_kubernetes_service_annotation_prometheus_io_scheme is the
        # prometheus.io/scheme annotation; if its value matches the regex (http or https), it is written to __scheme__
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
        # Metrics path exposed by the application. This must agree with the Service: the rule reads the
        # prometheus.io/path annotation (for example '/metrics') and writes it to __metrics_path__
      - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
        action: replace
        target_label: __address__
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        # Expose the application's custom port: join the address with the "prometheus.io/port = <port>"
        # annotation declared on the Service and assign the result to __address__, so Prometheus can
        # reach that port and combine it with __metrics_path__ to fetch the metrics
      - action: labelmap          # keep the labels matched below
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace           # copy __meta_kubernetes_namespace into kubernetes_namespace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        action: replace
        target_label: kubernetes_name

2. Apply
kubectl apply -f prometheus-cfg.yaml

3. Verify
[root@k8s-master01 prometheus]# kubectl get cm -n monitor-sa |grep con
prometheus-config   1      163m
```

### 3.3 Deploy Prometheus with a Deployment

```
1. Write the YAML file
[root@k8s-master01 prometheus]# cat prometheus-deploy.yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: prometheus-server
  namespace: monitor-sa
  labels:
    app: prometheus
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus
      component: server
    #matchExpressions:
    #- {key: app, operator: In, values: [prometheus]}
    #- {key: component, operator: In, values: [server]}
  template:
    metadata:
      labels:
        app: prometheus
        component: server
      annotations:
        prometheus.io/scrape: 'false'
    spec:
      nodeName: k8s-work01
      serviceAccountName: monitor
      containers:
      - name: prometheus
        image: 100.100.157.10:5000/k8s/prometheus/prometheus:latest
        imagePullPolicy: IfNotPresent
        command:
        - prometheus
        - --config.file=/etc/prometheus/prometheus.yml
        - --storage.tsdb.path=/prometheus
        # ^ directory where the time-series data is stored
        - --storage.tsdb.retention.time=3d   # how long data is kept before deletion (default: 15 days)
        - --web.enable-lifecycle             # enable hot reloading of the configuration
        ports:
        - containerPort: 9090
          protocol: TCP
        volumeMounts:
        - mountPath: /etc/prometheus/prometheus.yml
          name: prometheus-config
          subPath: prometheus.yml
        - mountPath: /prometheus/
          name: prometheus-storage-volume
      volumes:
      - name: prometheus-config
        configMap:
          name: prometheus-config
          items:
          - key: prometheus.yml
            path: prometheus.yml
            mode: 0644
      - name: prometheus-storage-volume
        hostPath:
          path: /root/hxy/data/prometheus/
          type: Directory

2. Apply
kubectl apply -f prometheus-deploy.yaml

3. Verify
[root@k8s-master01 prometheus]# kubectl get deploy -n monitor-sa |grep ser
prometheus-server   1/1     1            1           104m
```

### 3.4 Create a Service for the Prometheus Pod

```
1. Write the YAML file
[root@k8s-master01 prometheus]# cat prometheus-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: prometheus
  namespace: monitor-sa
  labels:
    app: prometheus
spec:
  type: NodePort
  ports:
  - port: 9090
    targetPort: 9090
    protocol: TCP
  selector:
    app: prometheus
    component: server

2. Apply
kubectl apply -f prometheus-svc.yaml

3. Verify
[root@k8s-master01 prometheus]# kubectl get svc -n monitor-sa |grep pro
prometheus   NodePort   10.100.136.90   <none>   9090:31467/TCP   135m
```

### 3.5 Access Test

> The query above shows that the Service is mapped to port 31467 on the host. Open `<IP of the work1 node>:31467/graph` to reach the Prometheus web UI.

## 4. Install Grafana

### 4.1 Write and Apply the YAML File

```
1. Write the YAML file
[root@k8s-master01 prometheus]# cat grafana.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: monitoring-grafana
  namespace: monitor-sa
spec:
  replicas: 1
  selector:
    matchLabels:
      task: monitoring
      k8s-app: grafana
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: grafana
    spec:
      nodeName: k8s-work01
      containers:
      - name: grafana
        image: 100.100.157.10:5000/k8s/prometheus/grafana:latest
        ports:
        - containerPort: 3000
          protocol: TCP
        volumeMounts:
        - mountPath: /etc/ssl/certs
          name: ca-certificates
          readOnly: true
        - mountPath: /var/lib/grafana
          name: grafana-storage
        env:
        - name: INFLUXDB_HOST
          value: monitoring-influxdb
        - name: GF_SERVER_HTTP_PORT
          value: "3000"
          # The following env variables are required to make Grafana accessible via
          # the kubernetes api-server proxy. On production clusters, we recommend
          # removing these env variables, setup auth for grafana, and expose the grafana
          # service using a LoadBalancer or a public IP.
        - name: GF_AUTH_BASIC_ENABLED
          value: "false"
        - name: GF_AUTH_ANONYMOUS_ENABLED
          value: "true"
        - name: GF_AUTH_ANONYMOUS_ORG_ROLE
          value: Admin
        - name: GF_SERVER_ROOT_URL
          # If you're only using the API Server proxy, set this value instead:
          # value: /api/v1/namespaces/kube-system/services/monitoring-grafana/proxy
          value: /
      volumes:
      - name: ca-certificates
        hostPath:
          path: /etc/ssl/certs
      - name: grafana-storage
        emptyDir: {}
---
apiVersion: v1
kind: Service
metadata:
  labels:
    # For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons)
    # If you are NOT using this as an addon, you should comment out this line.
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: monitoring-grafana
  name: monitoring-grafana
  namespace: monitor-sa
spec:
  # In a production setup, we recommend accessing Grafana through an external Loadbalancer
  # or through a public IP.
  # type: LoadBalancer
  # You could also use NodePort to expose the service at a randomly-generated port
  # type: NodePort
  ports:
  - port: 80
    targetPort: 3000
  selector:
    k8s-app: grafana
  type: NodePort

2. Apply
kubectl apply -f grafana.yaml

3. Verify
[root@k8s-master01 prometheus]# kubectl get pod -n monitor-sa -o wide | grep monitor
monitoring-grafana-9fd8c4d46-kmxsc   1/1     Running   0          113m   10.244.1.23   k8s-work01   <none>           <none>
[root@k8s-master01 prometheus]# kubectl get svc -n monitor-sa
NAME                 TYPE       CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
monitoring-grafana   NodePort   10.103.20.21   <none>        80:30102/TCP   113m
```

### 4.2 Access Test

> The query above shows that the Service is mapped to port 30102 on the host. Open `<IP of the work1 node>:30102` to reach the Grafana web UI.

### 4.3 Add a Monitoring Dashboard Template

- Add the Prometheus data source
- Import a JSON file or a dashboard ID
- For node-exporter, for example: import template ID 11074

## 5. Install the kube-state-metrics Component

> About kube-state-metrics
>
> kube-state-metrics watches the API server and generates state metrics for resource objects such as Deployments, Nodes, and Pods. Note that kube-state-metrics only exposes these metrics and does not store them, so we use Prometheus to scrape and store the data. It focuses on workload metadata such as the state of Deployments, Pods, and replicas: How many replicas were scheduled? How many are available now? How many Pods are running, stopped, or terminated? How many times has a Pod restarted? How many jobs are running?

### 5.1 Create a ServiceAccount and Authorize It

```
1. Create the ServiceAccount and authorize it
[root@k8s-master01 prometheus]# cat kube-state-metrics-rbac.yaml
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: kube-state-metrics
  namespace: monitor-sa
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: kube-state-metrics
rules:
- apiGroups: [""]
  resources: ["nodes", "pods", "services", "resourcequotas", "replicationcontrollers", "limitranges", "persistentvolumeclaims", "persistentvolumes", "namespaces", "endpoints"]
  verbs: ["list", "watch"]
# "apps" is added here because current clusters serve these workloads from the apps group
- apiGroups: ["extensions", "apps"]
  resources: ["daemonsets", "deployments", "replicasets"]
  verbs: ["list", "watch"]
- apiGroups: ["apps"]
  resources: ["statefulsets"]
  verbs: ["list", "watch"]
- apiGroups: ["batch"]
  resources: ["cronjobs", "jobs"]
  verbs: ["list", "watch"]
- apiGroups: ["autoscaling"]
  resources: ["horizontalpodautoscalers"]
  verbs: ["list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kube-state-metrics
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kube-state-metrics
subjects:
- kind: ServiceAccount
  name: kube-state-metrics
  namespace: monitor-sa

2. Apply
kubectl apply -f kube-state-metrics-rbac.yaml
```

### 5.2 Write and Apply the YAML File

```
1. Write the YAML
[root@k8s-master01 prometheus]# cat kube-state-metrics-deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kube-state-metrics
  namespace: monitor-sa
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kube-state-metrics
  template:
    metadata:
      labels:
        app: kube-state-metrics
    spec:
      nodeName: k8s-node02
      serviceAccountName: kube-state-metrics
      containers:
      - name: kube-state-metrics
        image: 100.100.157.10:5000/k8s/prometheus/kube-state-metrics
        ports:
        - containerPort: 8080

2. Apply
kubectl apply -f kube-state-metrics-deploy.yaml

3. Verify
[root@k8s-master01 prometheus]# kubectl get pod -n monitor-sa -o wide |grep state
kube-state-metrics-5b689dccc7-7pfsv   1/1     Running   0          7m27s   10.244.2.7   k8s-node02   <none>           <none>
```

### 5.3 Create the Service

```
1. Write the Service YAML file
[root@k8s-master01 prometheus]# cat kube-state-metrics-svc.yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
    prometheus.io/scrape: 'true'
  name: kube-state-metrics
  namespace: monitor-sa
  labels:
    app: kube-state-metrics
spec:
  ports:
  - name: kube-state-metrics
    port: 8080
    protocol: TCP
  selector:
    app: kube-state-metrics

2. Apply
kubectl apply -f kube-state-metrics-svc.yaml

3. Verify
[root@k8s-master01 prometheus]# kubectl get svc -n monitor-sa
NAME                 TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
kube-state-metrics   ClusterIP   10.105.152.211   <none>        8080/TCP   8m2s
```

### 5.4 Import the Kubernetes Pod Monitoring Template

- Add the Prometheus data source
- Import a JSON file or a dashboard ID
- For `kube-state-metrics`, for example: import template ID 13105

## 6. Install the Alertmanager Component

### 6.1 Create the alertmanager-cm.yaml Configuration File

```
1. Create the ConfigMap
[root@k8s-master01 prometheus]# cat alertmanager-cm.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: alertmanager   # must match the configMap name referenced by the Alertmanager Deployment
  namespace: monitor-sa
data:
  alertmanager.yml: |
    global:
      resolve_timeout: 15s
    inhibit_rules:
    - source_match:
        severity: 'critical'
      target_match:
        severity: 'warning'
      equal: ['alertname', 'namespace']
    route:
      group_by: ['alertname', 'namespace']
      group_wait: 30s
      group_interval: 5m
      repeat_interval: 1h
      receiver: 'webhook1'
      routes:
      - match:
          severity: 'warning'   # corrected to match on the severity label
        receiver: 'webhook1'
    receivers:
    - name: 'webhook1'
      webhook_configs:
      - url: 'http://100.100.157.12:30071/dingtalk/webhook1/send'
        send_resolved: true

2. Apply
kubectl apply -f alertmanager-cm.yaml
```

### 6.2 Create the Alertmanager Deployment

```shell
1. Edit the YAML
[root@k8s-master01 prometheus]# cat alertmanager-deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: alertmanager
  namespace: monitor-sa
  labels:
    app: alertmanager
    component: server
spec:
  replicas: 1
  selector:
    matchLabels:
      app: alertmanager
      component: server
  template:
    metadata:
      labels:
        app: alertmanager
        component: server
    spec:
      nodeName: k8s-work01
      containers:
      - name: alertmanager
        image: 100.100.157.10:5000/k8s/prometheus/alertmanager:latest
        args:
        - "--config.file=/etc/alertmanager/alertmanager.yml"
        - "--log.level=debug"
        ports:
        - containerPort: 9093
          name: alertmanager
        volumeMounts:
        - name: alertmanager-config
          mountPath: /etc/alertmanager
        - name: localtime
          mountPath: /etc/localtime
      volumes:
      - name: alertmanager-config
        configMap:
          name: alertmanager
      - name: localtime
        hostPath:
          path: /usr/share/zoneinfo/Asia/Shanghai

2. Apply
kubectl apply -f alertmanager-deploy.yaml
```

### 6.3 Create the Alertmanager Service YAML

```shell
1. Edit the YAML
[root@k8s-master01 prometheus]# cat alertmanager-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: alertmanager
  namespace: monitor-sa
  labels:
    app: alertmanager
spec:
  type: NodePort
  ports:
  - port: 9093
    targetPort: 9093
    nodePort: 30903   # set the NodePort explicitly, within the 30000-32767 range
    protocol: TCP
  selector:
    app: alertmanager

2. Apply
kubectl apply -f alertmanager-svc.yaml
```

### 6.4 Create the Prometheus Configuration with Alerting Rules

```shell
1. Delete the ConfigMap created earlier
[root@k8s-master01 prometheus]# kubectl delete -f prometheus-cfg.yaml
configmap "prometheus-config" deleted

2. Create the new ConfigMap
[root@k8s-master01 prometheus]# cat prometheus-alertmanager-cfg.yaml
kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    app: prometheus
  name: prometheus-config
  namespace: monitor-sa
data:
  prometheus.yml: |
    rule_files:
    - /etc/prometheus/rules.yml
    alerting:
      alertmanagers:
      - static_configs:
        - targets: ["100.100.157.12:30903"]
    global:
      scrape_interval: 15s
      scrape_timeout: 10s
      evaluation_interval: 1m
    scrape_configs:
    - job_name: 'kubernetes-node'
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - source_labels: [__address__]
        regex: '(.*):10250'
        replacement: '${1}:9100'
        target_label: __address__
        action: replace
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
    - job_name: 'kubernetes-node-cadvisor'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
    - job_name: 'kubernetes-apiserver'
      kubernetes_sd_configs:
      - role: endpoints
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
        action: keep
        regex: default;kubernetes;https
    - job_name: 'kubernetes-service-endpoints'
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
        action: replace
        target_label: __scheme__
        regex: (https?)
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
        action: replace
        target_label: __address__
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        action: replace
        target_label: kubernetes_name
    - job_name: 'kubernetes-pods'
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - action: keep
        regex: true
        source_labels:
        - __meta_kubernetes_pod_annotation_prometheus_io_scrape
      - action: replace
        regex: (.+)
        source_labels:
        - __meta_kubernetes_pod_annotation_prometheus_io_path
        target_label: __metrics_path__
      - action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        source_labels:
        - __address__
        - __meta_kubernetes_pod_annotation_prometheus_io_port
        target_label: __address__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - action: replace
        source_labels:
        - __meta_kubernetes_namespace
        target_label: kubernetes_namespace
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_name
        target_label: kubernetes_pod_name
    - job_name: 'kubernetes-kube-proxy'
      scrape_interval: 5s
      static_configs:
      - targets: ['100.100.157.10:10249','100.100.157.11:10249','100.100.157.12:10249']
    - job_name: 'kubernetes-etcd'
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/k8s-certs/etcd/ca.crt
        cert_file: /var/run/secrets/kubernetes.io/k8s-certs/etcd/server.crt
        key_file: /var/run/secrets/kubernetes.io/k8s-certs/etcd/server.key
      scrape_interval: 5s
      static_configs:
      - targets: ['100.100.157.10:2379']
  rules.yml: |
    groups:
    - name: example
      rules:
      - alert: kube-proxy CPU usage above 80%
        expr: rate(process_cpu_seconds_total{job=~"kubernetes-kube-proxy"}[1m]) * 100 > 80
        for: 2s
        labels:
          severity: warning
        annotations:
          description: "CPU usage of the {{$labels.job}} component on {{$labels.instance}} exceeds 80%"
      - alert: kube-proxy CPU usage above 90%
        expr: rate(process_cpu_seconds_total{job=~"kubernetes-kube-proxy"}[1m]) * 100 > 90
        for: 2s
        labels:
          severity: critical
        annotations:
          description: "CPU usage of the {{$labels.job}} component on {{$labels.instance}} exceeds 90%"
      # Note: no scrape job named kubernetes-schedule or kubernetes-controller-manager is defined in
      # prometheus.yml above, so the rules for those jobs match nothing until such jobs are added
      - alert: scheduler CPU usage above 80%
        expr: rate(process_cpu_seconds_total{job=~"kubernetes-schedule"}[1m]) * 100 > 80
        for: 2s
        labels:
          severity: warning
        annotations:
          description: "CPU usage of the {{$labels.job}} component on {{$labels.instance}} exceeds 80%"
      - alert: scheduler CPU usage above 90%
        expr: rate(process_cpu_seconds_total{job=~"kubernetes-schedule"}[1m]) * 100 > 90
        for: 2s
        labels:
          severity: critical
        annotations:
          description: "CPU usage of the {{$labels.job}} component on {{$labels.instance}} exceeds 90%"
      - alert: controller-manager CPU usage above 80%
        expr: rate(process_cpu_seconds_total{job=~"kubernetes-controller-manager"}[1m]) * 100 > 80
        for: 2s
        labels:
          severity: warning
        annotations:
          description: "CPU usage of the {{$labels.job}} component on {{$labels.instance}} exceeds 80%"
      - alert: controller-manager CPU usage above 90%
        # Note: the threshold in this expression is 0, not 90; change it to 90 to match the alert name
        expr: rate(process_cpu_seconds_total{job=~"kubernetes-controller-manager"}[1m]) * 100 > 0
        for: 2s
        labels:
          severity: critical
        annotations:
          description: "CPU usage of the {{$labels.job}} component on {{$labels.instance}} exceeds 90%"
      - alert: apiserver CPU usage above 80%
        expr: rate(process_cpu_seconds_total{job=~"kubernetes-apiserver"}[1m]) * 100 > 80
        for: 2s
        labels:
          severity: warning
        annotations:
          description: "CPU usage of the {{$labels.job}} component on {{$labels.instance}} exceeds 80%"
      - alert: apiserver CPU usage above 90%
        expr: rate(process_cpu_seconds_total{job=~"kubernetes-apiserver"}[1m]) * 100 > 90
        for: 2s
        labels:
          severity: critical
        annotations:
          description: "CPU usage of the {{$labels.job}} component on {{$labels.instance}} exceeds 90%"
      - alert: etcd CPU usage above 80%
        expr: rate(process_cpu_seconds_total{job=~"kubernetes-etcd"}[1m]) * 100 > 80
        for: 2s
        labels:
          severity: warning
        annotations:
          description: "CPU usage of the {{$labels.job}} component on {{$labels.instance}} exceeds 80%"
      - alert: etcd CPU usage above 90%
        expr: rate(process_cpu_seconds_total{job=~"kubernetes-etcd"}[1m]) * 100 > 90
        for: 2s
        labels:
          severity: critical
        annotations:
          description: "CPU usage of the {{$labels.job}} component on {{$labels.instance}} exceeds 90%"
      - alert: kube-state-metrics CPU usage above 80%
        expr: rate(process_cpu_seconds_total{k8s_app=~"kube-state-metrics"}[1m]) * 100 > 80
        for: 2s
        labels:
          severity: warning
        annotations:
          description: "CPU usage of the {{$labels.k8s_app}} component on {{$labels.instance}} exceeds 80%"
          value: "{{ $value }}%"
          threshold: "80%"
      - alert: kube-state-metrics CPU usage above 90%
        # Note: the threshold in this expression is 0, not 90; change it to 90 to match the alert name
        expr: rate(process_cpu_seconds_total{k8s_app=~"kube-state-metrics"}[1m]) * 100 > 0
        for: 2s
        labels:
          severity: critical
        annotations:
          description: "CPU usage of the {{$labels.k8s_app}} component on {{$labels.instance}} exceeds 90%"
          value: "{{ $value }}%"
          threshold: "90%"
      - alert: coredns CPU usage above 80%
        expr: rate(process_cpu_seconds_total{k8s_app=~"kube-dns"}[1m]) * 100 > 80
        for: 2s
        labels:
          severity: warning
        annotations:
          description: "CPU usage of the {{$labels.k8s_app}} component on {{$labels.instance}} exceeds 80%"
          value: "{{ $value }}%"
          threshold: "80%"
      - alert: coredns CPU usage above 90%
        expr: rate(process_cpu_seconds_total{k8s_app=~"kube-dns"}[1m]) * 100 > 90
        for: 2s
        labels:
          severity: critical
        annotations:
          description: "CPU usage of the {{$labels.k8s_app}} component on {{$labels.instance}} exceeds 90%"
          value: "{{ $value }}%"
          threshold: "90%"
      - alert: kube-proxy open file descriptors > 600
        expr: process_open_fds{job=~"kubernetes-kube-proxy"} > 600
        for: 2s
        labels:
          severity: warning
        annotations:
          description: "{{$labels.job}} on {{$labels.instance}} has more than 600 open file descriptors"
          value: "{{ $value }}"
      - alert: kube-proxy open file descriptors > 1000
        expr: process_open_fds{job=~"kubernetes-kube-proxy"} > 1000
        for: 2s
        labels:
          severity: critical
        annotations:
          description: "{{$labels.job}} on {{$labels.instance}} has more than 1000 open file descriptors"
          value: "{{ $value }}"
      - alert: kubernetes-schedule open file descriptors > 600
        expr: process_open_fds{job=~"kubernetes-schedule"} > 600
        for: 2s
        labels:
          severity: warning
        annotations:
          description: "{{$labels.job}} on {{$labels.instance}} has more than 600 open file descriptors"
          value: "{{ $value }}"
      - alert: kubernetes-schedule open file descriptors > 1000
        expr: process_open_fds{job=~"kubernetes-schedule"} > 1000
        for: 2s
        labels:
          severity: critical
        annotations:
          description: "{{$labels.job}} on {{$labels.instance}} has more than 1000 open file descriptors"
          value: "{{ $value }}"
      - alert: kubernetes-controller-manager open file descriptors > 600
        expr: process_open_fds{job=~"kubernetes-controller-manager"} > 600
        for: 2s
        labels:
          severity: warning
        annotations:
          description: "{{$labels.job}} on {{$labels.instance}} has more than 600 open file descriptors"
          value: "{{ $value }}"
      - alert: kubernetes-controller-manager open file descriptors > 1000
        expr: process_open_fds{job=~"kubernetes-controller-manager"} > 1000
        for: 2s
        labels:
          severity: critical
        annotations:
          description: "{{$labels.job}} on {{$labels.instance}} has more than 1000 open file descriptors"
          value: "{{ $value }}"
      - alert: kubernetes-apiserver open file descriptors > 600
        expr: process_open_fds{job=~"kubernetes-apiserver"} > 600
        for: 2s
        labels:
          severity: warning
        annotations:
          description: "{{$labels.job}} on {{$labels.instance}} has more than 600 open file descriptors"
          value: "{{ $value }}"
      - alert: kubernetes-apiserver open file descriptors > 1000
        expr: process_open_fds{job=~"kubernetes-apiserver"} > 1000
        for: 2s
        labels:
          severity: critical
        annotations:
          description: "{{$labels.job}} on {{$labels.instance}} has more than 1000 open file descriptors"
          value: "{{ $value }}"
      - alert: kubernetes-etcd open file descriptors > 600
        expr: process_open_fds{job=~"kubernetes-etcd"} > 600
        for: 2s
        labels:
          severity: warning
        annotations:
          description: "{{$labels.job}} on {{$labels.instance}} has more than 600 open file descriptors"
          value: "{{ $value }}"
      - alert: kubernetes-etcd open file descriptors > 1000
        expr: process_open_fds{job=~"kubernetes-etcd"} > 1000
        for: 2s
        labels:
          severity: critical
        annotations:
          description: "{{$labels.job}} on {{$labels.instance}} has more than 1000 open file descriptors"
          value: "{{ $value }}"
      - alert: coredns
        expr: process_open_fds{k8s_app=~"kube-dns"} > 600
        for: 2s
        labels:
          severity: warning
        annotations:
          description: "Add-on {{$labels.k8s_app}} ({{$labels.instance}}): more than 600 open file descriptors"
          value: "{{ $value }}"
      - alert: coredns
        expr: process_open_fds{k8s_app=~"kube-dns"} > 1000
        for: 2s
        labels:
          severity: critical
        annotations:
          description: "Add-on {{$labels.k8s_app}} ({{$labels.instance}}): more than 1000 open file descriptors"
          value: "{{ $value }}"

3. Apply
[root@k8s-master01 prometheus]# kubectl apply -f prometheus-alertmanager-cfg.yaml
configmap/prometheus-config created
[root@k8s-master01 prometheus]# kubectl get cm -n monitor-sa
NAME                DATA   AGE
alertmanager        1      25m
prometheus-config   2      3m20s
```

## 7. Install Prometheus and Alertmanager

### 7.1 Delete the Prometheus Deployment Created in the Earlier Steps

```sh
kubectl delete -f prometheus-deploy.yaml
deployment.apps "prometheus-server" deleted
```

### 7.2 Generate the etcd-certs Secret

```shell
[root@k8s-master01 prometheus]# kubectl -n monitor-sa create secret generic etcd-certs --from-file=/etc/kubernetes/pki/etcd/server.key --from-file=/etc/kubernetes/pki/etcd/server.crt --from-file=/etc/kubernetes/pki/etcd/ca.crt
secret/etcd-certs created
[root@k8s-master01 prometheus]# kubectl get secret -n monitor-sa
NAME                  TYPE                                  DATA   AGE
default-token-jjw8z   kubernetes.io/service-account-token   3      24h
etcd-certs            Opaque                                3      40s
monitor-token-jr24f   kubernetes.io/service-account-token   3      23h
```

### 7.3 Write the Deployment YAML

```shell
1. Write the Prometheus Deployment YAML
[root@k8s-master01 prometheus]# cat prometheus-deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: prometheus-server
  namespace: monitor-sa
  labels:
    app: prometheus
    component: server
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus
      component: server
  template:
    metadata:
      labels:
        app: prometheus
        component: server
    spec:
      nodeName: k8s-work01
      serviceAccountName: monitor
      containers:
      - name: prometheus
        image: 100.100.157.10:5000/k8s/prometheus/prometheus:latest
        imagePullPolicy: IfNotPresent
        args:
        - "--config.file=/etc/prometheus/prometheus.yml"
        - "--storage.tsdb.path=/prometheus"
        - "--web.enable-lifecycle"
        ports:
        - containerPort: 9090
        volumeMounts:
        - mountPath: /etc/prometheus
          name: prometheus-config
        - mountPath: /prometheus/
          name: prometheus-storage-volume
        - name: k8s-certs
          mountPath: /var/run/secrets/kubernetes.io/k8s-certs/etcd/
      volumes:
      - name: prometheus-config
        configMap:
          name: prometheus-config
      - name: prometheus-storage-volume
        hostPath:
          path: /root/hxy/data/prometheus/
          type: DirectoryOrCreate
      - name: k8s-certs
        secret:
          secretName: etcd-certs

2. Apply
kubectl apply -f prometheus-deploy.yaml
```

### 7.4 Verify

```shell
[root@k8s-master01 prometheus]# kubectl get pod -n monitor-sa
NAME                                  READY   STATUS    RESTARTS   AGE
alertmanager-6746c4b964-fj5sd         1/1     Running   0          10h
kube-state-metrics-5b689dccc7-7pfsv   1/1     Running   0          16h
monitoring-grafana-9fd8c4d46-kmxsc    1/1     Running   0          20h
node-exporter-8sg29                   1/1     Running   0          22h
node-exporter-vwvg6                   1/1     Running   0          22h
node-exporter-wv9mw                   1/1     Running   0          22h
prometheus-server-5875bc5556-9cflt    1/1     Running   0          6m4s
[root@k8s-master01 prometheus]# kubectl get svc -n monitor-sa
NAME           TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
alertmanager   NodePort   10.101.13.179   <none>        9093:30903/TCP   14h
```

## 8. Deploy the DingTalk Webhook for Alerting

### 8.1 Edit the Webhook ConfigMap

```shell
[root@k8s-master01 prometheus]# cat dingtalk-configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-webhook-dingtalk-config
  namespace: monitor-sa
data:
  config.yml: |-
    templates:
      - /etc/prometheus-webhook-dingtalk/default.tmpl
    targets:
      webhook1:
        url: https://oapi.dingtalk.com/robot/send?access_token=0c05f33c5a85757dcc433bada4fc4c8af88b23b20edd628c96a235736aedf408
        secret: SEC50c82183087eea89b8a5eeeb3a3ad9555f5e159f07360a35393ae545db6b4542
        mention:
          all: true
        message:
          text: '{{ template "default.tmpl" . }}'
  default.tmpl: |
    {{ define "default.tmpl" }}

    {{- if gt (len .Alerts.Firing) 0 -}}
    {{- range $index, $alert := .Alerts.Firing -}}

    ============ = **<font color='#FF0000'>Alert</font>** = ============= {{/* red text */}}

    **Alert name:** {{ $alert.Labels.alertname }}

    **Severity:** {{ $alert.Labels.severity }}

    **Status:** {{ .Status }}

    **Instance:** {{ $alert.Labels.instance }} {{ $alert.Labels.device }}

    **Summary:** {{ .Annotations.summary }}

    **Details:** {{ $alert.Annotations.message }}{{ $alert.Annotations.description}}

    **Started at:** {{ ($alert.StartsAt.Add 28800e9).Format "2006-01-02 15:04:05" }}

    ============ = end = =============
    {{- end }}
    {{- end }}

    {{- if gt (len .Alerts.Resolved) 0 -}}
    {{- range $index, $alert := .Alerts.Resolved -}}

    ============ = <font color='#00FF00'>Resolved</font> = ============= {{/* green text */}}

    **Instance:** {{ .Labels.instance }}

    **Alert name:** {{ .Labels.alertname }}

    **Severity:** {{ $alert.Labels.severity }}

    **Status:** {{ .Status }}

    **Summary:** {{ $alert.Annotations.summary }}

    **Details:** {{ $alert.Annotations.message }}{{ $alert.Annotations.description}}

    **Started at:** {{ ($alert.StartsAt.Add 28800e9).Format "2006-01-02 15:04:05" }}

    **Resolved at:** {{ ($alert.EndsAt.Add 28800e9).Format "2006-01-02 15:04:05" }}

    ============ = **end** = =============
    {{- end }}
    {{- end }}
    {{- end }}
```

### 8.2 Edit the Deployment File

```shell
[root@k8s-master01 prometheus]# cat dingtalk-webhook-deploy.yaml
apiVersion: v1
kind: Service
metadata:
  name: dingtalk
  namespace: monitor-sa
  labels:
    app: dingtalk
spec:
  selector:
    app: dingtalk
  ports:
  - name: dingtalk
    port: 8060
    protocol: TCP
    targetPort: 8060
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: dingtalk
  namespace: monitor-sa
spec:
  replicas: 1
  selector:
    matchLabels:
      app: dingtalk
  template:
    metadata:
      name: dingtalk
      labels:
        app: dingtalk
    spec:
      nodeSelector:                           # key change: schedule the pod onto k8s-node02
        kubernetes.io/hostname: k8s-node02    # uses the node's hostname label
      containers:
      - name: dingtalk
        image: 100.100.157.10:5000/k8s/prometheus/prometheus-webhook-dingtalk
        imagePullPolicy: IfNotPresent
        args:
        - --web.listen-address=:8060
        - --config.file=/etc/prometheus-webhook-dingtalk/config.yml
        ports:
        - containerPort: 8060
        volumeMounts:
        - name: config
          mountPath: /etc/prometheus-webhook-dingtalk
      volumes:
      - name: config
        configMap:
          name: prometheus-webhook-dingtalk-config
```

### 8.3 Edit the Service File

```
[root@k8s-master01 prometheus]# cat dingtalk_service.yml
apiVersion: v1
kind: Service
metadata:
  name: dingtalk
  namespace: monitor-sa
  labels:
    app: dingtalk
spec:
  type: NodePort          # key change: switched to NodePort
  selector:
    app: dingtalk
  ports:
  - name: dingtalk
    port: 8060            # port the Service listens on (in-cluster access)
    targetPort: 8060      # container port
    nodePort: 30071       # (optional) set the NodePort manually, within the 30000-32767 range
```

## 9. Verify the Alerts

Last modified: May 6, 2025 © Reproduction permitted with proper attribution
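
As a complement to the alert-verification step at the end of this guide, a test alert can be pushed to Alertmanager by hand instead of waiting for a rule to fire. The sketch below is only an illustration under stated assumptions: it targets Alertmanager's v2 HTTP API (`POST /api/v2/alerts`), the function names `build_test_alert` and `send_alerts` are hypothetical helpers, and the alert name and annotation text are made up for the test. The address in the usage note assumes the NodePort 30903 configured earlier.

```python
import json
import urllib.request
from datetime import datetime, timedelta, timezone


def build_test_alert(alertname, severity, instance, minutes=5):
    """Build the JSON body (a list with one alert) for POST /api/v2/alerts.

    The labels mirror the ones this guide routes and groups on
    (alertname, severity, namespace); the values are illustrative only.
    """
    now = datetime.now(timezone.utc)
    alert = {
        "labels": {
            "alertname": alertname,
            "severity": severity,
            "instance": instance,
            "namespace": "monitor-sa",
        },
        "annotations": {
            "description": f"manual test alert for {instance}",
        },
        # Timezone-aware ISO 8601 timestamps; the alert resolves itself after `minutes`.
        "startsAt": now.isoformat(),
        "endsAt": (now + timedelta(minutes=minutes)).isoformat(),
    }
    return [alert]


def send_alerts(base_url, alerts, timeout=5):
    """POST the alerts to Alertmanager and return the HTTP status code."""
    request = urllib.request.Request(
        f"{base_url}/api/v2/alerts",
        data=json.dumps(alerts).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

A call such as `send_alerts("http://100.100.157.11:30903", build_test_alert("ManualTestAlert", "warning", "100.100.157.10:10249"))` would submit the alert. If the route and the DingTalk webhook are wired up as described above, a message should arrive in the DingTalk group after the configured `group_wait`.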